
Most AI platforms are optimized for speed, not security.

They generate answers fast but can’t link to the documents where those answers come from.

They store your prompts but don’t tell you where that data lives or who can access it. They don’t isolate data by customer and by case, which can let sensitive data from one case leak into a completely unrelated one.

They summarize documents but don’t understand legal nuance.

In personal injury law, that’s a liability, because PI firms routinely handle data such as:

- Medical records

- Billing histories

- Insurance claim files

- Settlement negotiations

- HIPAA-protected health information

You’re already on the hook to protect it. But if your AI tools aren’t designed for legal use, you may be exposing yourself in ways that are hard to detect (and even harder to explain later).

In this article, we’ll break down why most AI tools fall short on security, what legal-grade AI actually looks like, and how Supio is designed differently from the ground up.

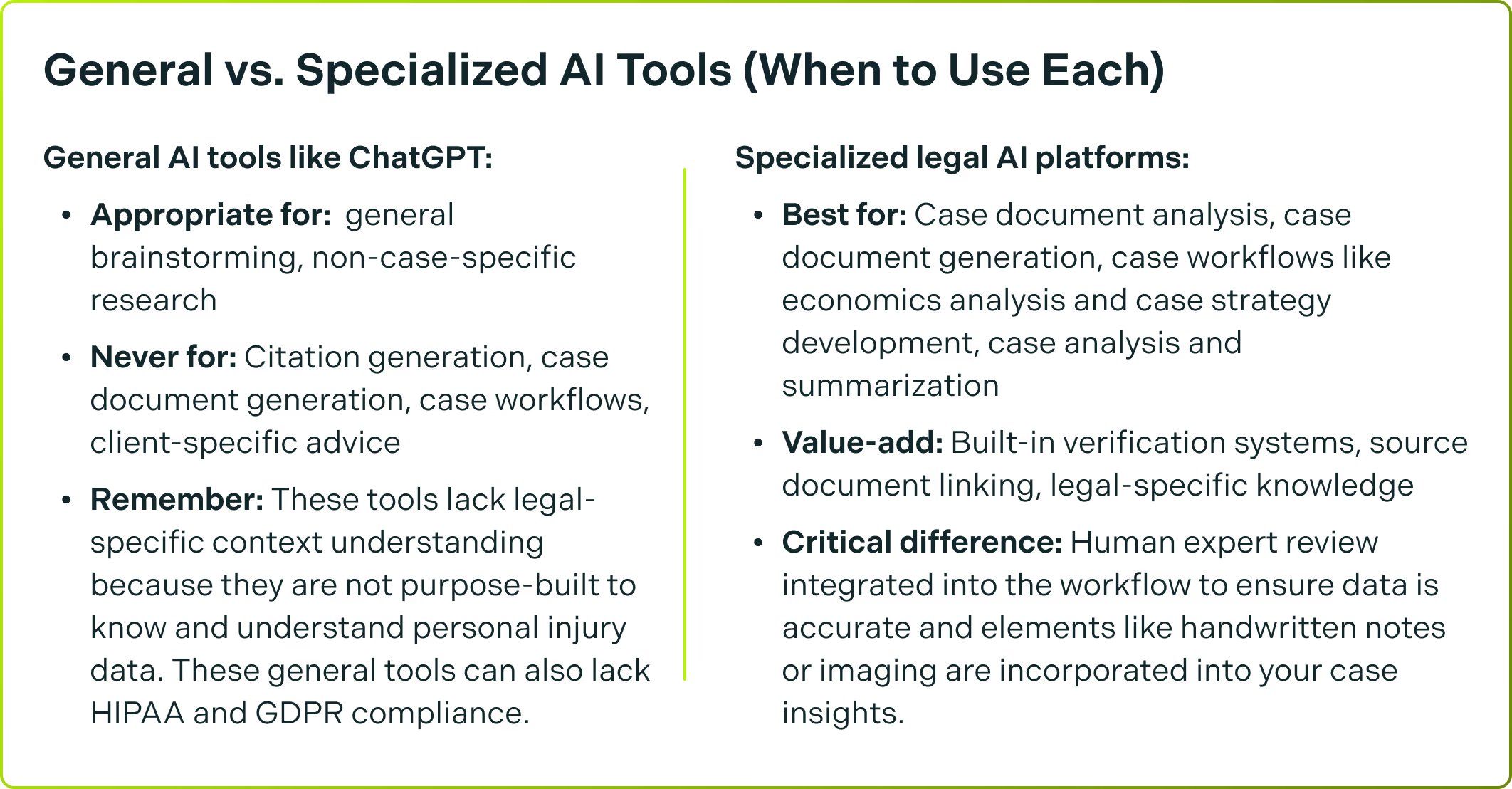

The vast majority of AI tools aren’t built for PI

AI platforms are often built for speed and scale, not legal-grade confidentiality.

This includes ChatGPT, Claude and other general-purpose tools. Here’s where that disconnect shows up.

1. Weak access controls

Generic tools often lack robust permissioning. Even some “secure” platforms make it hard to answer questions like:

- Who accessed this case file last week?

- Did that engineer still have access after they left?

- Can we trace how this data got modified?

SOC 2 audits are a must-have, but internal access controls (like offboarding and credential hygiene) matter just as much. And at many legal tech companies, they’re still a work in progress.
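Those three questions are exactly what an access audit log should be able to answer. The Python below is a minimal sketch, assuming a simple in-memory list of access events; the `AccessEvent` record and the helper functions are hypothetical illustrations, not Supio’s implementation or any vendor’s API. A production system would keep this log append-only in durable storage.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AccessEvent:
    """One entry in a hypothetical append-only access log."""
    user: str
    case_id: str
    action: str  # e.g. "read" or "modify"
    at: datetime


def accessed_since(log, case_id, since):
    """Who accessed this case file since a given time?"""
    return sorted({e.user for e in log if e.case_id == case_id and e.at >= since})


def access_after_offboarding(log, offboarded):
    """Did anyone use their access after leaving? `offboarded` maps user -> exit time."""
    return [e for e in log if e.user in offboarded and e.at > offboarded[e.user]]


def modification_trail(log, case_id):
    """How did this data get modified? Returns the case's modify events in time order."""
    return sorted(
        (e for e in log if e.case_id == case_id and e.action == "modify"),
        key=lambda e: e.at,
    )


now = datetime(2024, 6, 10)
log = [
    AccessEvent("alice", "case-17", "read", now - timedelta(days=3)),
    AccessEvent("bob", "case-17", "modify", now - timedelta(days=10)),
    AccessEvent("bob", "case-17", "read", now - timedelta(days=1)),
]
offboarded = {"bob": now - timedelta(days=5)}

print(accessed_since(log, "case-17", now - timedelta(days=7)))  # ['alice', 'bob']
print(len(access_after_offboarding(log, offboarded)))  # 1
print([e.user for e in modification_trail(log, "case-17")])  # ['bob']
```

In this toy log, the second query flags that "bob" read the case file four days after his offboarding date, which is precisely the gap that weak credential hygiene leaves open.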

2. No client-level data separation

Storing data securely is one thing. Keeping it logically isolated is another.

Supio doesn’t just separate data at the firm level; we isolate it at the case level.

That means:

- No accidental spillover between unrelated cases

- Tighter internal controls for your staff

- Less risk of cross-contamination in sensitive litigation

It’s a small design decision with big downstream consequences.
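To make case-level isolation concrete, here is a minimal Python sketch, assuming a toy in-memory store in which every document belongs to exactly one case and each user needs an explicit per-case grant. The `CaseScopedStore` class and its methods are hypothetical, not a description of how Supio implements isolation. The point it illustrates: a search run while working on one case has no code path that can return another case’s documents.

```python
class CaseScopedStore:
    """Toy document store where every document lives under exactly one case ID.

    Reads and searches require a per-case grant, and searches are scoped to a
    single case, so results can never spill over from an unrelated case.
    """

    def __init__(self):
        self._docs = {}    # case_id -> {doc_name: text}
        self._grants = {}  # user -> set of case_ids the user may access

    def grant(self, user, case_id):
        self._grants.setdefault(user, set()).add(case_id)

    def revoke_all(self, user):
        """Offboarding: drop every case grant for a user in one step."""
        self._grants.pop(user, None)

    def put(self, case_id, name, text):
        self._docs.setdefault(case_id, {})[name] = text

    def _check(self, user, case_id):
        if case_id not in self._grants.get(user, set()):
            raise PermissionError(f"{user} has no access to {case_id}")

    def get(self, user, case_id, name):
        self._check(user, case_id)
        return self._docs[case_id][name]

    def search(self, user, case_id, term):
        """Case-insensitive search within one case only; there is no cross-case query."""
        self._check(user, case_id)
        docs = self._docs.get(case_id, {})
        return sorted(name for name, text in docs.items() if term.lower() in text.lower())


store = CaseScopedStore()
store.put("case-A", "er_visit.txt", "Lumbar strain after rear-end collision")
store.put("case-B", "ortho_note.txt", "Lumbar fusion recommended")
store.grant("paralegal_1", "case-A")
print(store.search("paralegal_1", "case-A", "lumbar"))  # ['er_visit.txt']
```

Both cases mention a lumbar injury, but the search only ever sees case A, and asking for case B raises `PermissionError` because no grant exists. Scoping the query itself, rather than filtering results afterward, is what limits the blast radius of a mistake.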

3. Legal blind spots in general-purpose AI

Even if the tool is secure, you still have to worry about what it does with the data.

General-purpose tools like ChatGPT and Claude don’t specialize in legal documents, and it shows.

They often:

- Misread ICD codes

- Skip over critical billing gaps

- Suggest treatments for deceased clients

- Summarize notes without citing any source

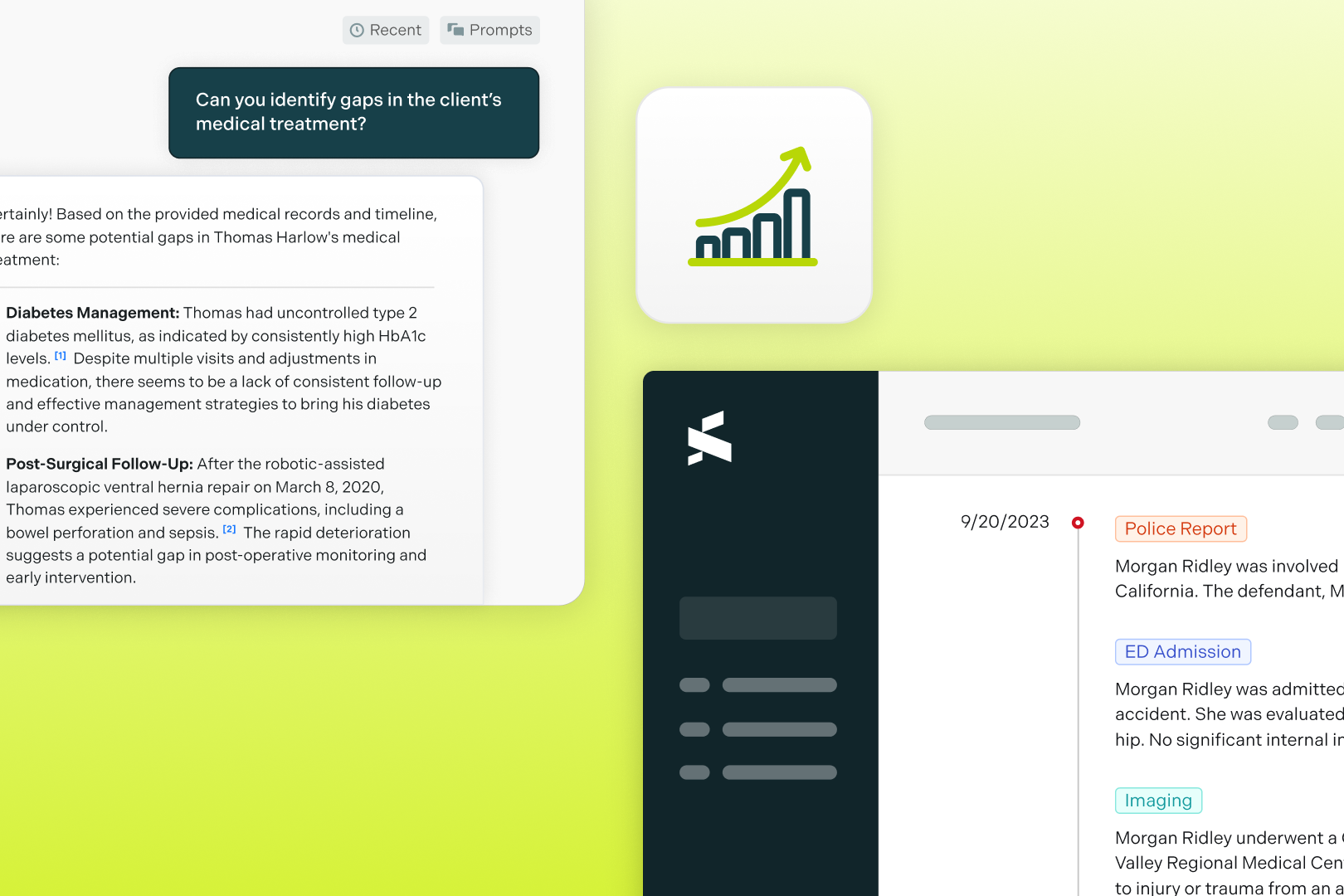

Supio’s AI Assistant understands medical chronologies, causation, and document citation →

Supio’s AI is built for litigation-grade output.

Every fact links back to the record and every summary is built on traceable evidence.

You’re getting a documented answer instead of a guess.

When general-purpose AI tools are used to generate filings or legal arguments, the consequences can be severe.

Recent examples:

- June 2023: A personal injury attorney used ChatGPT to prepare a filing against Avianca Airlines and cited at least six cases that didn’t exist. The incident triggered a sanctions hearing and led one federal court to require lawyers to certify whether AI was used in filings.

- February 2024: A Vancouver lawyer came under review after submitting ChatGPT-generated case law in a child custody dispute. The case law, intended to support her argument for taking her client’s children overseas, turned out to be entirely fictional.

The takeaway:

These tools don’t understand the stakes of PI cases, and they don’t carry your professional liability.

If your firm is using generic AI to draft deposition questions, prep demands, or summarize case data, the risk isn’t hypothetical. It’s already showing up in court.

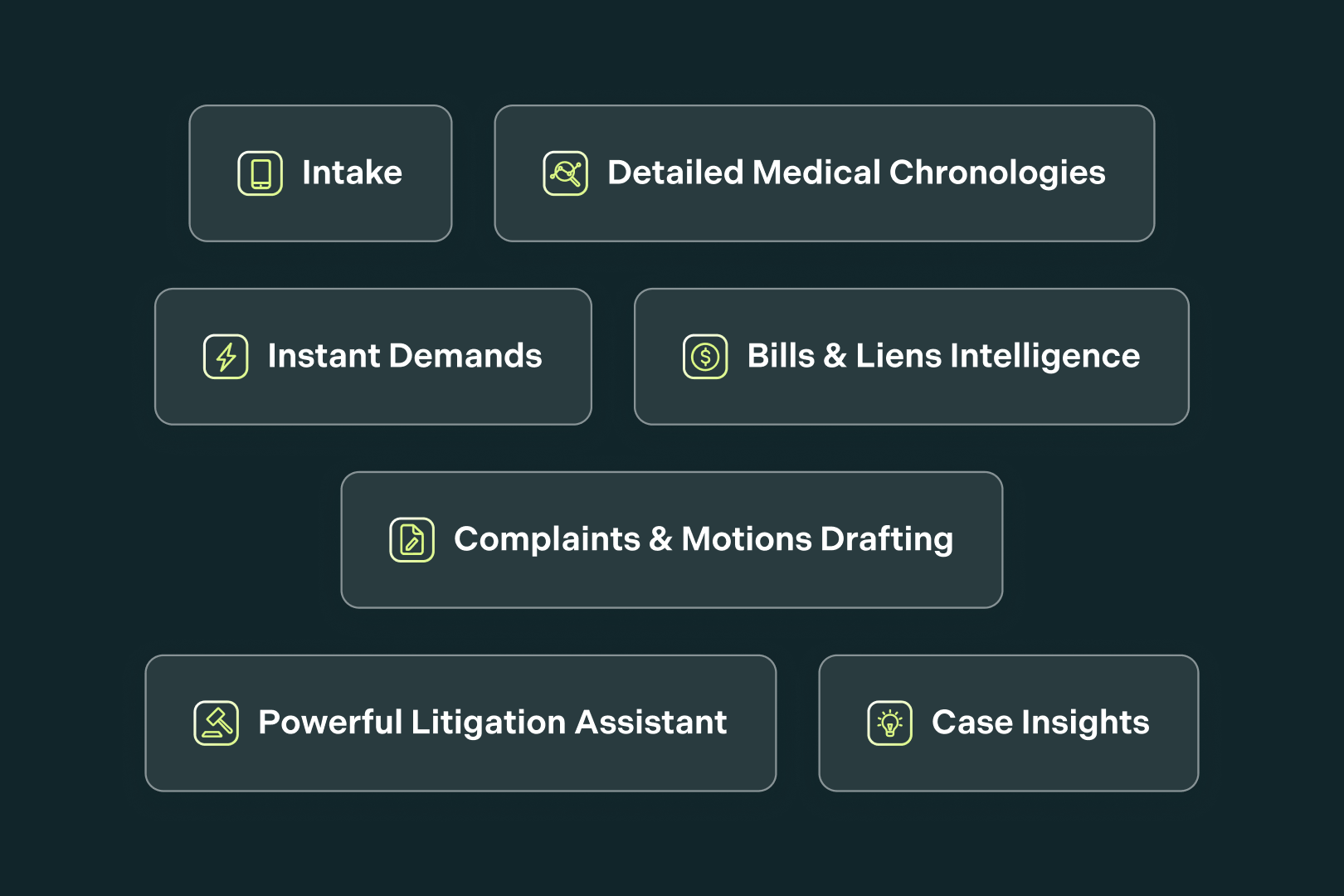

What a PI-ready AI platform should include

Here’s what to look for if you want security that holds up:

- End-to-end encryption

(Not just in transit, but at rest and everywhere else your data is stored.)

- HIPAA-compliant infrastructure

(Not just a sticker, but actual, audit-traceable controls.)

- Role-based access controls

(Set permissions by role, not just by account.)

- Case-level data isolation

(Minimize the blast radius of any mistake.)

- Audit logs

(Know who accessed what, and when.)

- Transparent data handling

(No silent training on client files.)

- Legal-context understanding

(The AI should understand causation and treatment gaps, and know how to surface leverage, not just summarize text.)
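"Set permissions by role, not just by account" can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical role table and a `User` record with assigned cases; the role names, permissions, and `is_allowed` helper are made up for the example and do not describe any particular product. An action is allowed only when the user’s role grants it and the user is assigned to that case, and an unknown role gets no permissions at all.

```python
from dataclasses import dataclass

# Hypothetical role table: permissions attach to roles, not to individual accounts.
ROLE_PERMISSIONS = {
    "attorney": {"read", "annotate", "export"},
    "paralegal": {"read", "annotate"},
    "intake": {"read"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    cases: frozenset  # case IDs this user is assigned to


def is_allowed(user, action, case_id):
    """Allow only if the role grants the action AND the user is on the case (deny by default)."""
    role_ok = action in ROLE_PERMISSIONS.get(user.role, set())
    return role_ok and case_id in user.cases


pat = User("pat", "paralegal", frozenset({"case-17"}))
print(is_allowed(pat, "read", "case-17"))    # True
print(is_allowed(pat, "export", "case-17"))  # False: role lacks export
print(is_allowed(pat, "read", "case-42"))    # False: not assigned to the case
```

The design payoff is maintenance: tightening what every paralegal can do means editing one entry in the role table instead of auditing each account by hand.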

Why AI + human review is the right answer to this problem

Generic AI tools give you raw output and leave you to figure out what’s correct. Supio works differently.

In this quick video, we break down how our hybrid system blends software, AI, and expert human review to create a continuous feedback loop.

Final thoughts → security isn’t a bonus feature.

AI is here to stay in PI law. But most tools treat your data like content instead of evidence.

If your AI can’t protect your clients’ files with the same discipline you’re expected to apply, then don’t use it.

And the defense won’t care that you used “industry standard” tools if the wrong file leaks.